EDITORIAL. NAVIGATING THE HUMAN

Florian Hadler & Daniel Irrgang

“The manner in which human sense perception is organized, the medium in which it is accomplished, is determined not only by nature but by historical circumstances as well.”

– Walter Benjamin, The work of art in the age of mechanical reproduction, 1935.

Humans design technology, and technology shapes what it means to be human. That is old news, at least for media theory, Science and Technology Studies and a couple of other disciplines. The story goes from Freudian wax scrapings of the antique to Nietzsche’s pen, from Heidegger’s radio to Kittler’s typewriter. Technology does not only refer to functional instruments; it also has existential dimensions. It shapes our cognition, bodies and social relations. Technology has a culturing effect – it informs and reformulates our perception of the world and of each other. In fact, culture and technology are interdependent.1 But while this basic insight into the culturing effects and implications of technologies is well established within certain areas of theoretical discourse, it is certainly not well understood by the people and organizations who actually shape technology today. From a tech and engineering perspective, the human is conceived as programmable. Technology provides the gentle means by which the human can be navigated. And technology, in this perspective, does not have an agency of its own – it is rather an instrument for the cultivation of the human. And the cultivation of the human is best achieved through the navigation and design of human behaviour.

The subject of behavioural design became prominent in interface discourse and practice in recent decades. It is now visible with the widespread application of nudging mechanisms and dark patterns2 that emerged from the behavioural and persuasive technology labs at Stanford and elsewhere from the late 1990s onwards.3 These developments in the context of the so-called human-centred design paradigm did not come out of nowhere.

In the middle of the twentieth century a shift in the relationship between humans and their technological artefacts occurred. The machines, whose inner mechanical organs and operation principles could still be observed by the naked eye and understood by the observer, were now, in various fields of society, slowly replaced by apparatuses. The apparatus is an opaque black box, in the cybernetic sense of the term, whose “inner” functional principles are not only out of sight, hidden under operational surfaces such as control panels, but also characterized by a high degree of structural complexity.4 Thus, the operator of the apparatus would rather focus on the operational modes of its surface than aim at an understanding of its deeper functional principles. Today, these functional principles are completely out of reach, hidden in well-guarded data centres and compiled within inaccessible source code, secured by terms of service and cloud infrastructures.

This shift in the human−technology relationship – from a structural to a functional understanding, from access to inner processes to surface operations – was certainly accelerated by the intensification of military research during the Second World War, followed by the technological race of the Cold War. US research took a leading role, funding large programmes that then turned into the cradle of what is now called the tech sector of Silicon Valley.5 The focus of technology development shifted: from the invention of tools or equipment in service of a human operator to the design of “man-machine units”,6 where “human engineering”7 plays a role similar to the engineering of technology. This shift was not limited to military research, as historiographies of computing tend to construct. Academic research in ergonomics quickly spread to the general industry, where, to quote a contemporary observation, “the emphasis [was] shifting from the employment of men who were ‘doers’ to men who are ‘controllers’”.8

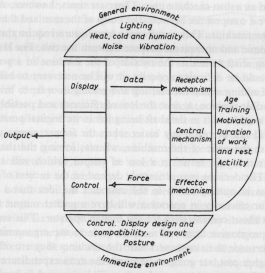

A landmark in the investigation of human factors in industries was the foundation of the Human Research Society in Oxford in 1949, renamed the Ergonomics Research Society in 1950.9 One of its founding members, the British psychologist K. F. Hywel Murrell, published the seminal work Ergonomics. Man in his Working Environment in 1965.10 Focusing on the efficiency of work processes, studies in ergonomics should “enable the cost to the individual to be minimized” and thereby make a “contribution not only to human welfare but to the national economy as a whole”.11 Murrell’s description of the cognitive and material task of operating equipment as a “closed loop system”, in which the operator “receives and processes information”,12 was obviously influenced by contemporary discourses of behaviourism and cybernetics. Its input–output logic is illustrated in Figure 1 of Murrell’s book, which also provides a symbolic form for ergonomics research of the time: the displayed data evoke a feedback loop with the control system, while operator and machine – which are not separated in the diagram – constitute the functional parts of the system. Consequently, its caption does not locate the operator but rather describes “Man as a component in a closed loop system”.13

Suggested citation: Hadler, Florian & Irrgang, Daniel (2019). “Editorial. Navigating the Human.” In: Interface Critique Journal 2. Eds. Florian Hadler, Alice Soiné, Daniel Irrgang.

DOI: 10.11588/ic.2019.2.67261

This article is released under a Creative Commons license (CC BY 4.0).

Fig. 1: K. F. Hywel Murrell, Ergonomics. Man in his Working Environment (London and New York: Chapman and Hall, 1986), p. xv.

Here, the human actor is reduced to a mere functional dimension in a system of production. And although Murrell’s book aims, at first glance, at a socially sustainable relationship between human and machine – including implications for welfare and better working conditions – it soon becomes clear that it is the efficiency of the interaction of man and machine, maximising productivity, which is at stake here: “To achieve the maximum efficiency, a man-machine system must be designed as a whole, with the man being complementary to the machine and the machine being complementary to the abilities of the man.”14

These ergonomic endeavours are the predecessors of what has been, in the last two to three decades, termed behavioural programming and persuasive technology: the design of human behaviour in technological settings. What does this imply? At least two things. For one, the user is turned into a lab rat, with every moment of the screen flow and user journey labyrinths measured, cross-referenced, tracked and translated back into key performance indicator (KPI) optimisation procedures. Secondly, the designer merely executes the endless results of A/B tests and optimisation funnels. Design basically disappears and dissolves into modular templates. Both human sides of the interface – usage and production – become mere functions of the apparatus, generating data and executing data-driven design decisions.

While this approach is still very much in play and still generates increasing revenues for the big platforms, its mechanisms are no longer a secret. And once they are revealed, their effects are slowly rendered ineffective – as with any magic spell. And there is a tendency for at least some of us users to become aware of the conditions and contingencies of the apparatuses around us, which gives us the chance to rediscover the human factor in the interface.

However, we have to consider that, among other things, the human is – and always has been – a political and ideological tool. The human is – to rephrase Giorgio Agamben – not an event that has been completed once and for all, but an occurrence that is always under way.15 It has been used to humanise and dehumanise, to justify hierarchies and exclusion. Or – as it is done today – to turn business practices into corporate prophecies.16 Referring to a human nature, or an evolutionary human destiny even, seems like the last resort of a tech ecosystem slowly realising its hubris. This specific view of the human as something to be reformed through technology drives both the protagonists and antagonists of the tech sector. The so-called tech humanism or transhumanism, which is currently receiving widespread recognition through prominent entrepreneurs turned saviours of humanity and having second thoughts about their unicorn past, derives from the same notion of the human as universal man:17 the perfect user, who aligns intentional technology and self-mastery, using the phone as a body-tool, combining wellness culture with self-quantification, just as Silicon Valley amalgamated military research with the subculture countermovement into one coherent Californian Ideology.18 And of course they all still meet at Burning Man for some quality screen-free time.

These ideological and esoteric underpinnings of technological progressivism are more visible now than they were five years ago. Not everything is within reach, not everything can be put into the cloud, not everything gets better when it is connected, the world is not as whole as the famous photograph of the “blue marble” suggests, impact and disruption are not values in themselves. The question arises: what was Silicon Valley?19 And while some of the founding fathers of the Californian Ideology are still alive, we witness critical retrospectives,20 musealisations and the shattering and tragic downfall of tech stars.21 Corporate techno-utopias become shallow, as their inherent paradoxes and contradictions become more and more obvious. Numerous interconnected phenomena in different domains add to this situation. On the interface level we witness the incapacitation of the designer through data-driven conversion funnel optimisation, leading to horrible but economically efficient websites and services. On the consumer side, we monitor elevated usage conventions regarding social media and other digital means of communication, undermining intended-use cases and posing threats to liability. In technology development we see decreasing innovation in consumer-facing technologies, most visible in the saturated global smartphone penetration. In the investment domain we have record-breaking IPOs by non-profitable businesses22 and the domination of innovation through big platforms that are older than a decade, hoovering up or copying all innovation.23 In the business model domain, we see rising problems of advertising-based business models and related ad-fraud.24 In the political domain we experience the vulnerability of democratic processes through micro-targeting, the automatic promotion of highly engaging extremist content through self-learning algorithms25 and the critical examination of monopolisation effects of major platforms, with harsher regulations on the horizon.26

But technology of course still continues to navigate the human. Suggestions on where to go, what to do and what to watch, either made by looking at the stars or by following data-driven recommendations from the clouds, all add to the same attractive promise: a light and effortless being in the world. Technology’s expansion of human capacities and bodily functions, its most important promise in the last couple of thousand years, is now joined by the promise of the expansion of mental capabilities, delegating orientation and decision-making to a technological surrounding, saturated with data from our very own behaviour.

If we look at the history of interfaces, of design and of technology in general, it becomes clear: technology is genuinely fluid. It morphs and curves itself into novel usages and shapes social grammars. It is constantly de-scripted and re-scripted by social use, with endless processes of appropriation, translation and adaptive innovation. And it is obviously inseparable from the human. After all, it might very well be what makes us human (or post-human, for that matter) – we have always been cyborgs.27 And just as the human is always under way, technology remains ingrained in every step and every shape. Fortunately, both are never quite what they claim to be.28

As Vilém Flusser provocatively stated: “We can design our tools in such a way that they affect us in intended ways.”29 Rather than be integrated as a systemic element in functionalistic interface paradigms, the human factor in technology should be conceptualised as a resistant momentum of subjectification, of that which remains unknown. How can we design interfaces that are open to this unknown, that create openness and opportunities for self-realisation and autonomous authorship? How can interfaces enable diversity, heterogeneity and difference? How can we conceive of the user and usage as the unknown, the unfinished, the infinite?

If technology does indeed have theological dimensions,30 maybe the designers, producers, developers and users should not focus so much on unity, cult and following, but rather on the infinite and the unknown. They should focus on that which is the basic foundation of all religion and mysticism – and apparently also of technology: the transcendence of the human.31 Starting from there, let’s try to rethink what it means to navigate the human. It might have a lot to do with infinity and openness, and not so much with predictive algorithms, satellite imagery and patronising affordances.

ACKNOWLEDGEMENTS

One of the main motivations for this journal is the facilitation of an interdisciplinary platform, bridging gaps between arts, sciences and technology. We initiate dialogues about genealogies, current states and possible futures of apparatuses and applications. We are convinced that the complexity of our technological surroundings requires a variety of perspectives. Such perspectives are not only directed forward, but are also engaged with the past, reconstructing alternative histories of man−machine relations, which then, again, can be projected as multifarious future possibilities.32

We are proud to contribute to a discourse that is currently gaining traction. A traction that can be observed in the rising number of workshops, conferences, exhibitions and publications on topics related to Interface Critique. To include as many perspectives as possible, we have thus integrated numerous new formats: the single topic special section presents the results of the workshop “Interfaces and the Post-Industrial Society”, which was part of the annual conference of the German Society for Media Studies. Furthermore, we have included a series of explorative photographs from the archive of the Berlin-based artist Armin Linke, dealing with technological surfaces of interaction and control. We also introduce alternative forms of textual contributions, such as reports on individual artistic practices (Darsha Hewitt, Mari Matsutoya & Laurel Halo) and interviews (a conversation between Katriona Beales and William Tunstall-Pedoe).

This second volume of Interface Critique would not have been possible without a variety of supporters, both individuals and institutions. We are indebted to Frieder Nake for his permission to translate and republish an article for Kursbuch from 1984. In this context, we would further like to thank Mari Matsutoya for the translation of Frieder Nake’s text as well as the Centre for Art and Media Karlsruhe (ZKM), especially Margit Rosen, for the funding of the translation. For the permission to republish Mari Matsutoya’s and Laurel Halo’s text we are thankful to the authors and artists involved as well as to the editors of After Us, where the text was first published in 2017. We also thank Filipa Cordeiro for the permission to republish her text, which first appeared in Wrong Wrong Magazine in 2015. We are indebted to Armin Linke for providing us access to his archive and for his permission to use a hand-picked series of his photographs for this issue. Our gratitude goes to AG Interfaces, a group of the German Society for Media Studies (GfM), which contributed the results of their workshop “Interfaces and the Post-Industrial Society”. We thank Katriona Beales, William Tunstall-Pedoe and Irini Papadimitrou for the permission to republish a conversation along with the accompanying artwork. We also thank Anthony Masure for the translation of his text “Manifeste pour un design acentré” into English. We are indebted to Alexander Schindler, who supported us generously with an InDesign template suited to our needs, thereby significantly lightening the editorial process. We gratefully acknowledge the Centre for Interdisciplinary Methods (CIM) at Warwick University, especially Nathaniel Tkacz and Michael Dieter, for facilitating a fruitful workshop on Interface Criticism, generating valuable contacts and conversations. 
A special thanks goes to our publisher, arthistoricum.net, and the Heidelberg University Press, especially Frank Krabbes, Daniela Jakob, Anja Konopka and everyone else who has been involved, for their ongoing generous support and access to the Open Journal System. And we thank Olia Lialina, Martin Fritz and the Merz Akademie Stuttgart for their financial support of this volume and their invitation in 2018 to present our project at the Stadtbibliothek Stuttgart. Last but not least we thank all authors – your work is the core of this project and this journal would obviously not be possible without you.

Looking forward to the next volume.

– Berlin, October 2019

References

Agamben, Giorgio, The Open: Man and Animal (Stanford: Stanford University Press, 2003).

Barbrook, Richard, and Andy Cameron, The Californian Ideology. Science as Culture 6/1 (1996), pp. 44–72.

Benjamin, Walter, The work of art in the age of mechanical reproduction, in: Illuminations, ed. Hannah Arendt (New York: Schocken Books, 1969).

Browne, R. C., H. D. Darcus, C. G. Roberts, R. Conrad, O. G. Edholm, W. E. Hick, W. F. Floyd, G. M. Morant, H. Mound, K. F. H. Murrell and T. P. Randle, Ergonomics Research Society. British Medical Journal 1/4660 (1950), p. 1009.

Colomina, Beatriz, and Mark Wigley, Are We Human? Notes on an Archaeology of Design (Baden: Lars Müller, 2017).

Cusumano, Michael A., Annabelle Gawer and David B. Yoffie, The Business of Platforms: Strategy in the Age of Digital Competition, Innovation, and Power (New York 2019).

Diederichsen, Diedrich, and Anselm Franke (eds.), The Whole Earth. California and the Disappearance of the Outside (Berlin: Sternberg Press, 2013).

Fisher, Max, and Amanda Taub, How YouTube Radicalized Brazil. The New York Times (August 2019), https://www.nytimes.com/2019/08/11/world/americas/youtube-brazil.html, access: October 10, 2019.

Flusser, Vilém, Vom Rückschlag der Werkzeuge auf das Bewusstsein (undated manuscript, Vilém Flusser Archive, document no. 2586).

Fogg, B.J., Persuasive technology: using computers to change what we think and do. Ubiquity Vol. 2002 Issue December (2002), pp. 89–120. DOI 10.1145/764008.763957

Galloway, Scott, WeWTF, Part Deux (September 2019), https://www.profgalloway.com/wewtf-part-deux, access: October 8, 2019.

Haupt, Joachim, Facebook Futures: Mark Zuckerberg’s Discursive Construction of a Better World. New Media and Society, in print.

Hayles, N. Katherine, How We Became Posthuman. Virtual Bodies in Cybernetics, Literature, and Informatics (Chicago: University of Chicago Press, 1999).

Hughes, Chris, It’s Time to Break Up Facebook. The New York Times (May 2019), https://www.nytimes.com/2019/05/09/opinion/sunday/chris-hughes-facebook-zuckerberg.html, access: October 8, 2019.

Kay, Alan C., User Interface. A Personal View, in: multiMEDIA. From Wagner to virtual reality, eds. Randall Packer and Ken Jordan (New York: W. W. Norton & Co., 2001), pp. 121–131.

Lacey, Cherie, Catherine Caudwell and Alex Beattie, The Perfect User. Digital wellness movements insist there is a single way to “stay human”. Real Life Magazine (September 2019), https://reallifemag.com/the-perfect-user/, access: October 8, 2019.

Berdugo, Liat, The Halos of Devices: The Neo-Nimbus of Electronic Objects (February 2019), http://networkcultures.org/longform/2019/02/21/the-halos-of-devices-the-neo-nimbus-of-electronic-objects/, access: October 8, 2019.

Mathur, Arunesh et al., Dark Patterns at Scale: Findings from a Crawl of 11K Shopping Websites. Proc. ACM Hum.-Comput. Interact. 3, CSCW, Article 81 (2019).

Moles, Abraham A., Informationstheorie und ästhetische Wahrnehmung (Cologne: M. DuMont Schauberg, 1971).

Motion for preliminary approval and notice of settlement, Case No. 4:16-cv-06232-JSW, filed on October 4, 2019, at the United States District Court for the Northern District of California, Oakland Division, available here: https://www.documentcloud.org/documents/6455498-Facebooksettlement.html, access: October 10, 2019.

Murrell, K. F. Hywel, Ergonomics. Man in his Working Environment (London and New York: Chapman & Hall, 1986).

Noble, David F., The Religion of Technology. The Divinity of Man and the Spirit of Invention (New York et al.: Penguin Books, 1999).

Stevens, Matt, Elizabeth Warren on Breaking Up Big Tech, The New York Times (June 2019). https://www.nytimes.com/2019/06/26/us/politics/elizabeth-warren-break-up-amazon-facebook.html, access: October 10, 2019.

Tkacz, Nathaniel, Facebook’s Libra, Or, the End of Silicon Valley Innovation. Medium (June 2019), https://medium.com/@nathanieltkacz/facebooks-libra-or-the-end-of-silicon-valley-innovation-9cb2d1539bcd, access: October 8, 2019.

Williams, Raymond, Television. Technology and Cultural Form (London: Fontana, 1974).

Footnotes

1 Raymond Williams, Television: Technology and Cultural Form (London 1974).

2 Arunesh Mathur et al., Dark patterns at scale: findings from a crawl of 11K shopping websites. Proc. ACM Hum.-Comput. Interact. 3, CSCW, Article 81 (2019).

3 See for example the influential paper from B.J. Fogg, Persuasive technology: using computers to change what we think and do. Ubiquity (December 2002), pp. 89–120.

4 This quasi-dialectical distinction was coined by Vilém Flusser, who in turn adapted it from Abraham A. Moles’ pioneering work on information aesthetics. Cf. Abraham A. Moles, Informationstheorie und ästhetische Wahrnehmung (Cologne 1971).

5 The influence of ARPA-funded projects (Advanced Research Projects Agency, now called DARPA = Defense Advanced Research Projects Agency) – as the driving force for the powerful cybernetic paradigm of the following decades – on developments in human-computer interaction is well documented. In fact, Alan Kay, the main protagonist of GUI development at Xerox PARC in the 1970s, discussed research in aeronautics as the direct predecessor of research on computer interfaces. Cf. Alan C. Kay, User interface. A personal view, in: multiMEDIA. From Wagner to Virtual Reality, eds. Randall Packer and Ken Jordan (New York 2001), pp. 121–131.

6 K. F. Hywel Murrell, Ergonomics. Man in His Working Environment (London and New York 1986 [1965]), p. xvi.

7 Ibid., p. xiv.

8 Ibid., p. x.

9 Ibid., p. viii.

10 In their constitution, the Ergonomics Research Society stated their mission as “the study of the relation between man and his working environment”. R. C. Browne, H. D. Darcus, C. G. Roberts, R. Conrad, O. G. Edholm, W. E. Hick, W. F. Floyd, G. M. Morant, H. Mound, K. F. H. Murrell and T. P. Randle, Ergonomics Research Society. British Medical Journal 1/4660 (1950), p. 1009. Murrell adopted this mission statement for the title of his book. It is not only a valuable source for critical studies on the history of objectification of labour, where workers or operators and technological systems constitute ever effective units. It is also a necessary reference for a genealogy of the interface.

11 Murrell, Ergonomics, p. xiv.

12 Ibid., p. xiv.

13 Ibid., p. xv.

14 Ibid.

15 “Ontology, or first philosophy, is not an innocuous academic discipline, but in every sense the fundamental operation in which anthropogenesis, the becoming human of the living being, is realized. From the beginning, metaphysics is taken up in this strategy: it concerns precisely that meta that completes and preserves the overcoming of animal physis in the direction of human history. This overcoming is not an event that has been completed once and for all, but an occurrence that is always under way, that every time and in each individual decides between the human and the animal, between nature and history, between life and death.” Giorgio Agamben, The Open: Man and Animal (Stanford 2003), p. 79.

16 Joachim Haupt, Facebook futures: Mark Zuckerberg’s discursive construction of a better world. New Media and Society, in print.

17 Cherie Lacey, Catherine Caudwell and Alex Beattie, The perfect user. Digital wellness movements insist there is a single way to “stay human”. Real Life Magazine (September 2019), https://reallifemag.com/the-perfect-user/, access: October 8, 2019.

18 Richard Barbrook and Andy Cameron identified, already over 20 years ago, the “contradictory mix of technological determinism and libertarian individualism” as the main ingredient of the Californian Ideology. Cf. Richard Barbrook and Andy Cameron, The Californian Ideology. Science as Culture 6/1 (1996), pp. 44–72.

19 See for example: Nathaniel Tkacz, Facebook’s Libra, Or, the End of Silicon Valley Innovation. Medium (June 2019), https://medium.com/@nathanieltkacz/facebooks-libra-or-the-end-of-silicon-valley-innovation-9cb2d1539bcd, access: October 8, 2019.

20 Such as “The Whole Earth” exhibition at HKW Berlin (April 26–July 7, 2013); catalogue: The Whole Earth. California and the Disappearance of the Outside, eds. Diedrich Diederichsen and Anselm Franke (Berlin 2013).

21 While Theranos has been the most flamboyant example in recent years, there are many more, from Uber CEO Travis Kalanick to Twitter CEO Jack Patrick Dorsey and the former WeWork CEO Adam Neumann, who stepped down after an IPO filing that put the company in turmoil. And Mark Zuckerberg is obviously getting ready for some kind of major cathartic event.

22 WeWork is just the most recent example: Scott Galloway, WeWTF, Part Deux (September 2019), https://www.profgalloway.com/wewtf-part-deux, access: October 8, 2019.

23 Michael A. Cusumano, Annabelle Gawer and David B. Yoffie, The Business of Platforms: Strategy in the Age of Digital Competition, Innovation, and Power (New York 2019).

24 See for example the recent settlement, where Facebook Inc. agreed to pay $40 million to advertisers for the knowing inflation of video view statistics by more than 900%: Motion for preliminary approval and notice of settlement, Case No. 4:16-cv-06232-JSW, filed on October 4, 2019, at the United States District Court for the Northern District of California, Oakland Division, available here: https://www.documentcloud.org/documents/6455498-Facebooksettlement.html, access: October 10, 2019.

25 See for example: Max Fisher and Amanda Taub, How YouTube Radicalized Brazil. The New York Times (August 2019) https://www.nytimes.com/2019/08/11/world/americas/youtube-brazil.html, access: October 10, 2019.

26 Chris Hughes, It’s Time to Break Up Facebook. The New York Times (May 2019), https://www.nytimes.com/2019/05/09/opinion/sunday/chris-hughes-facebook-zuckerberg.html, access: October 8, 2019. See also: Matt Stevens, Elizabeth Warren on Breaking Up Big Tech. The New York Times (June 2019), https://www.nytimes.com/2019/06/26/us/politics/elizabeth-warren-break-up-amazon-facebook.html, access: October 10, 2019. And, of course, the recent ECJ judgements on the liabilities of social media platforms.

27 See Julia Heldt’s article as well as Laurel Halo’s and Mari Matsutoya’s reflection of their project on Hatsune Miku in this volume. One of the central publications in this discourse is How We Became Posthuman. Virtual Bodies in Cybernetics, Literature, and Informatics (Chicago 1999) by N. Katherine Hayles, who provided a paper on her current research for this volume.

28 Paraphrased from Beatriz Colomina and Mark Wigley, Are We Human? Notes on an Archaeology of Design (Baden 2017), p. 274: “Design is never quite what it claims to be. Fortunately. Its attempt to smooth over all the worries and minimize any friction always fails, in the same way that almost every minute of daily life is organized by the unsuccessful attempt to bury the unconscious.”

29 Vilém Flusser, Vom Rückschlag der Werkzeuge auf das Bewusstsein (undated manuscript, Vilém Flusser Archive, document no. 2586); translation: the authors.

30 Liat Berdugo, The Halos of Devices: The Neo-Nimbus of Electronic Objects (February 2019), http://networkcultures.org/longform/2019/02/21/the-halos-of-devices-the-neo-nimbus-of-electronic-objects/, access: October 8, 2019.


“The manner in which human sense perception is organized, the medium in which it is accomplished, is determined not only by nature but by historical circumstances as well.”

Humans design technology, and technology shapes what it means to be human. That is old news, at least for media theory, Science and Technology Studies and a couple of other disciplines. The story goes from Freudian wax scrapings of the antique to Nietzsche’s pen, from Heidegger’s radio to Kittler’s typewriter. Technology does not only refer to functional instruments, it has existential dimensions. It shapes our cognition, bodies and social relations. Technology has a culturing effect – it informs and reformulates our perception of the world and of each other. In fact, culture and technology are interdependent.1 But while this basic insight into the effects and implications of technologies and their culturing effects is well established within certain areas of theoretical discourse, it is certainly not well understood by people and organizations who actually shape technology today. From a tech and engineering perspective, the human is conceived as programmable. Technology provides the gentle means by which the human can be navigated. And technology, in this perspective, does not have an agency of its own – it is rather an instrument for the cultivation of the human. And the cultivation of the human is best achieved through the navigation and design of human behaviour.

The subject of behavioural design became prominent in interface discourse and practice in recent decades. It is now visible with the widespread application of nudging mechanisms and dark patterns2 that emerged from the behavioural and persuasive technology labs at Stanford and elsewhere from the late 1990s onwards.3 These developments in the context of the so-called human-centred design paradigm did not come out of nowhere.

In the middle of the twentieth century a shift in the relationship between humans and their technological artefacts occurred. The machines, whose inner mechanical organs and operation principles could still be observed by the naked eye and understood by the observer, were now, in various fields of society, slowly replaced by apparatuses. The apparatus is an opaque black box, in the cybernetic sense of the term, whose “inner” functional principles are not only out of sight, hidden under operational surfaces such as control panels, but also characterized by a high degree of structural complexity.4 Thus, the operator of the apparatus would rather focus on the operational modes of its surface than aim at an understanding of its deeper functional principles. Today, these functional principles are completely out of reach, hidden in well-guarded data centres and compiled within inaccessible source codes, secured by terms of services and cloud infrastructures.

This shift in the human−technology relationship – from a structural to a functional understanding, from access to inner processes to surface operations – was certainly accelerated by the intensification of military research during the Second World War, followed by the technological race of the Cold War. US research took a leading role, funding large programmes that then turned into the cradle of what is now called the tech sector of Silicon Valley.5 The focus of technology development shifted: from the invention of tools or equipment in service of a human operator to the design of “man-machine units”,6 where “human engineering”7 plays a role similar to the engineering of technology. This shift was not limited to military research, as historiographies of computing tend to construct. Academic research in ergonomics quickly spread to the general industry, where, to quote a contemporary observation, “the emphasis [was] shifting from the employment of men who were ‘doers’ to men who are ‘controllers’”.8

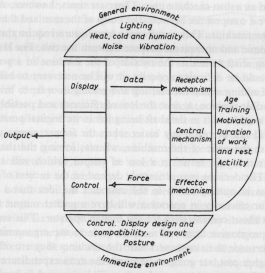

A landmark in the investigation of human factors in industries was the foundation of the Human Research Society in Oxford in 1949, renamed the Ergonomics Research Society in 1950.9 One of its founding members, the British psychologist K. F. Hywel Murrell, published the seminal work Ergonomics. Man in his Working Environment in 1965.10 Focusing on the efficiency of work processes, studies in ergonomics should “enable the cost to the individual to be minimized” and thereby make a “contribution not only to human welfare but to the national economy as a whole”.11 Murrell’s description of the cognitive and material task of operating equipment as a “closed loop system”, in which the operator “receives and processes information”,12 was obviously influenced by contemporary discourses of behaviourism and cybernetics. Its input−output logic is illustrated in Figure 1 of Murrell’s book, which also provides a symbolic form for ergonomics research of the time: the displayed data evoke a feedback loop with the control system, while operator and machine – which are not separated in the diagram – constitute the functional parts of the system. Consequently, its caption does not locate the operator but rather describes “Man as a component in a closed loop system”.13

Fig. 1: K. F. Hywel Murrell, Ergonomics. Man in his Working Environment (London and New York: Chapman & Hall, 1986), p. xv.

Here, the human actor is reduced to a mere functional dimension in a system of production. And although Murrell’s book aims, at first glance, at a socially sustainable relationship between human and machine – including implications for welfare and better working conditions – it soon becomes clear that it is the efficiency of the interaction of man and machine, maximising productivity, which is at stake here: “To achieve the maximum efficiency, a man-machine system must be designed as a whole, with the man being complementary to the machine and the machine being complementary to the abilities of the man.”14

These ergonomic endeavours are the predecessors of what has been, in the last two to three decades, termed behavioural programming and persuasive technology: the design of human behaviour in technological settings. What does this imply? At least two things. For one, the user is turned into a lab rat, with every moment of the screen flow and user journey labyrinths measured, cross-referenced, tracked and translated back into optimisation procedures for key performance indicators (KPIs). Secondly, the designer merely executes the endless results of A/B tests and optimisation funnels. Design basically disappears and dissolves into modular templates. Both human sides of the interface – usage and production – become mere functions of the apparatus, generating data and executing data-driven design decisions. While this approach is still very much in play and still generates increasing revenues for the big platforms, its mechanisms are no longer a secret. And once they are revealed, their effects are slowly rendered ineffective – as with any magic spell. And there is a tendency for at least some of us users to become aware of the conditions and contingencies of the apparatuses around us. Which gives us the chance to rediscover the human factor in the interface.

However, we have to consider that, among other things, the human is – and always has been – a political and ideological tool. The human is – to rephrase Giorgio Agamben – not an event that has been completed once and for all, but an occurrence that is always under way.15 It has been used to humanise and dehumanise, to justify hierarchies and exclusion. Or – as it is done today – to turn business practices into corporate prophecies.16 Referring to a human nature, or an evolutionary human destiny even, seems like the last resort of a tech ecosystem slowly realising its hubris. This specific view of the human as something to be reformed through technology drives both the protagonists and antagonists of the tech sector. The so-called tech humanism or transhumanism, which is currently receiving widespread recognition through prominent entrepreneurs turned saviours of humanity who are having second thoughts about their unicorn past, derives from the same notion of the human as universal man:17 the perfect user, who aligns intentional technology and self-mastery, using the phone as a body-tool, combining wellness culture with self-quantification, just as Silicon Valley amalgamated military research with the subculture countermovement into one coherent Californian Ideology.18 And of course they all still meet at Burning Man for some quality screen-free time. These ideological and esoteric underpinnings of technological progressivism are more visible now than they were five years ago. Not everything is within reach, not everything can be put into the cloud, not everything gets better when it is connected, the world is not as whole as the famous photograph of the “blue marble” suggests, impact and disruption are not values in themselves.

The question arises: what was Silicon Valley?19 And while some of the founding fathers of the Californian Ideology are still alive, we witness critical retrospectives,20 musealisations and the shattering and tragic downfall of tech stars.21 Corporate techno-utopias become shallow, as their inherent paradoxes and contradictions become more and more obvious. Numerous interconnected phenomena in different domains add to this situation. On the interface level we witness the incapacitation of the designer through data-driven conversion funnel optimisation, leading to horrible but economically efficient websites and services. On the consumer side, we monitor elevated usage conventions regarding social media and other digital means of communication, undermining intended-use cases and posing threats to liability. In technology development we see decreasing innovation in consumer-facing technologies, most visible in the saturated global smartphone penetration. In the investment domain we have record-breaking IPOs by non-profitable businesses22 and the domination of innovation by big platforms that are older than a decade, hoovering up or copying all innovation.23 In the business model domain, we see rising problems of advertising-based business models and related ad-fraud.24 In the political domain we experience the vulnerability of democratic processes through micro-targeting, the automatic promotion of highly engaging extremist content through self-learning algorithms25 and the critical examination of monopolisation effects of major platforms, with harsher regulations on the horizon.26

But technology of course still continues to navigate the human. Suggestions on where to go, what to do and what to watch, either made by looking at the stars or by following data-driven recommendations from the clouds, all add to the same attractive promise: a light and effortless being in the world.

Technology’s expansion of human capacities and bodily functions, its most important promise in the last couple of thousand years, is now joined by the promise of the expansion of mental capabilities, delegating orientation and decision-making to a technological surrounding, saturated with data from our very own behaviour. If we look at the history of interfaces, of design and of technology in general, it becomes clear: technology is genuinely fluid. It morphs and curves itself into novel usages and shapes social grammars. It is constantly de-scripted and re-scripted by social use, with endless processes of appropriation, translation and adaptive innovation. And it is obviously inseparable from the human. After all, it might very well be what makes us human (or post-human, for that matter) – we have always been cyborgs.27 And just as the human is always under way, technology remains ingrained in every step and every shape. Fortunately, both are never quite what they claim to be.28 As Vilém Flusser provocatively stated: “We can design our tools in such a way that they affect us in intended ways.”29

Rather than being integrated as a systemic element in functionalistic interface paradigms, the human factor in technology should be conceptualised as a resistant momentum of subjectification, of that which remains unknown. How can we design interfaces that are open to this unknown, that create openness and opportunities for self-realisation and autonomous authorship? How can interfaces enable diversity, heterogeneity and difference? How can we conceive of the user and usage as the unknown, the unfinished, the infinite? If technology does indeed have theological dimensions,30 maybe the designers, producers, developers and users should not focus so much on unity, cult and following, but rather on the infinite and the unknown. They should focus on that which is the basic foundation of all religion and mysticism – and apparently also of technology: the transcendence of the human.31 Starting from there, let’s try to rethink what it means to navigate the human. It might have a lot to do with infinity and openness, and not so much with predictive algorithms, satellite imagery and patronising affordances.

ACKNOWLEDGEMENTS

One of the main motivations for this journal is the facilitation of an interdisciplinary platform, bridging gaps between arts, sciences and technology. We initiate dialogues about genealogies, current states and possible futures of apparatuses and applications. We are convinced that the complexity of our technological surroundings requires a variety of perspectives. Such perspectives are not only directed forward, but are also engaged with the past, reconstructing alternative histories of man−machine relations, which then, again, can be projected as multifarious future possibilities.32 We are proud to contribute to a discourse that is currently gaining traction, a traction that can be observed in the rising number of workshops, conferences, exhibitions and publications on topics related to Interface Critique. To include as many perspectives as possible, we have thus integrated numerous new formats: the single-topic special section presents the results of the workshop “Interfaces and the Post-Industrial Society”, which was part of the annual conference of the German Society for Media Studies. Furthermore, we have included a series of explorative photographs from the archive of the Berlin-based artist Armin Linke, dealing with technological surfaces of interaction and control. We also introduce alternative forms of textual contributions, such as reports on individual artistic practices (Darsha Hewitt, Mari Matsutoya & Laurel Halo) and interviews (a conversation between Katriona Beales and William Tunstall-Pedoe).

This second volume of Interface Critique would not have been possible without a variety of supporters, both individuals and institutions. We are indebted to Frieder Nake for his permission to translate and republish an article for Kursbuch from 1984. In this context, we would further like to thank Mari Matsutoya for the translation of Frieder Nake’s text as well as the Centre for Art and Media Karlsruhe (ZKM), especially Margit Rosen, for the funding of the translation. For the permission to republish Mari Matsutoya’s and Laurel Halo’s text we are thankful to the authors and artists involved as well as to the editors of After Us, where the text was first published in 2017. We also thank Filipa Cordeiro for the permission to republish her text, which first appeared in Wrong Wrong Magazine in 2015. We are indebted to Armin Linke for providing us access to his archive and for his permission to use a hand-picked series of his photographs for this issue. Our gratitude goes to AG Interfaces, a group of the German Society for Media Studies (GfM), which contributed the results of their workshop “Interfaces and the Post-Industrial Society”. We thank Katriona Beales, William Tunstall-Pedoe and Irini Papadimitrou for the permission to republish a conversation along with the accompanying artwork. We also thank Anthony Masure for the translation of his text “Manifeste pour un design acentré” into English. We are indebted to Alexander Schindler, who supported us generously with an InDesign template suited to our needs, thereby significantly lightening the editorial process. We gratefully acknowledge the Centre for Interdisciplinary Methods (CIM) at Warwick University, especially Nathaniel Tkacz and Michael Dieter, for facilitating a fruitful workshop on Interface Criticism, generating valuable contacts and conversations. A special thanks goes to our publisher, arthistoricum.net, and the Heidelberg University Press, especially Frank Krabbes, Daniela Jakob, Anja Konopka and everyone else who has been involved, for their ongoing generous support and access to the Open Journal Systems. And we thank Olia Lialina, Martin Fritz and the Merz Akademie Stuttgart for their financial support of this volume and their invitation in 2018 to present our project at the Stadtbibliothek Stuttgart. Last but not least, we thank all authors – your work is the core of this project and this journal would obviously not be possible without you.

Looking forward to the next volume.

– Berlin, October 2019

References

Agamben, Giorgio, The Open: Man and Animal (Stanford: Stanford University Press, 2003).

Barbrook, Richard, and Andy Cameron, The Californian Ideology. Science as Culture 6/1 (1996), pp. 44–72.

Benjamin, Walter, The work of art in the age of mechanical reproduction, in: Illuminations, ed. Hannah Arendt (New York: Schocken Books, 1969).

Browne, R. C., H. D. Darcus, C. G. Roberts, R. Conrad, O. G. Edholm, W. E. Hick, W. F. Floyd, G. M. Morant, H. Mound, K. F. H. Murrell and T. P. Randle, Ergonomics Research Society. British Medical Journal 1/4660 (1950), p. 1009.

Colomina, Beatriz, and Mark Wigley, Are We Human? Notes on an Archaeology of Design (Baden: Lars Müller, 2017).

Cusumano, Michael A., Annabelle Gawer and David B. Yoffie, The Business of Platforms: Strategy in the Age of Digital Competition, Innovation, and Power (New York 2019).

Diederichsen, Diedrich, and Anselm Franke (eds.), The Whole Earth. California and the Disappearance of the Outside (Berlin: Sternberg Press, 2013).

Fisher, Max, and Amanda Taub, How YouTube Radicalized Brazil. The New York Times (August 2019), https://www.nytimes.com/2019/08/11/world/americas/youtube-brazil.html, access: October 10, 2019.

Flusser, Vilém, Vom Rückschlag der Werkzeuge auf das Bewusstsein (undated manuscript, Vilém Flusser Archive, document no. 2586).

Fogg, B.J., Persuasive technology: using computers to change what we think and do. Ubiquity Vol. 2002 Issue December (2002), pp. 89–120. DOI 10.1145/764008.763957

Galloway, Scott, WeWTF, Part Deux (September 2019), https://www.profgalloway.com/wewtf-part-deux, access: October 8, 2019.

Haupt, Joachim, Facebook Futures: Mark Zuckerberg’s Discursive Construction of a Better World. New Media and Society, in print.

Hayles, N. Katherine, How We Became Posthuman. Virtual Bodies in Cybernetics, Literature, and Informatics (Chicago: University of Chicago Press, 1999).

Hughes, Chris, It’s Time to Break Up Facebook. The New York Times (May 2019), https://www.nytimes.com/2019/05/09/opinion/sunday/chris-hughes-facebook-zuckerberg.html, access: October 8, 2019.

Kay, Alan C., User Interface. A Personal View, in: multiMEDIA. From Wagner to virtual reality, eds. Randall Packer and Ken Jordan (New York: W. W. Norton & Co., 2001), pp. 121–131.

Lacey, Cherie, Catherine Caudwell and Alex Beattie, The Perfect User. Digital wellness movements insist there is a single way to “stay human”. Real Life Magazine (September 2019), https://reallifemag.com/the-perfect-user/, access: October 8, 2019.

Berdugo, Liat, The Halos of Devices: The Neo-Nimbus of Electronic Objects (February 2019), http://networkcultures.org/longform/2019/02/21/the-halos-of-devices-the-neo-nimbus-of-electronic-objects/, access: October 8, 2019.

Mathur, Arunesh et al., Dark Patterns at Scale: Findings from a Crawl of 11K Shopping Websites. Proc. ACM Hum.-Comput. Interact. 3, CSCW, Article 81 (2019).

Moles, Abraham A., Informationstheorie und ästhetische Wahrnehmung (Cologne: M. DuMont Schauberg, 1971).

Motion for preliminary approval and notice of settlement, Case No. 4:16-cv-06232-JSW, filed on October 4, 2019, at the United States District Court for the Northern District of California, Oakland Division, available here: https://www.documentcloud.org/documents/6455498-Facebooksettlement.html, access: October 10, 2019.

Murrell, K. F. Hywel, Ergonomics. Man in his Working Environment (London and New York: Chapman & Hall, 1986).

Noble, David F., The Religion of Technology. The Divinity of Man and the Spirit of Invention (New York et al.: Penguin Books, 1999).

Stevens, Matt, Elizabeth Warren on Breaking Up Big Tech, The New York Times (June 2019). https://www.nytimes.com/2019/06/26/us/politics/elizabeth-warren-break-up-amazon-facebook.html, access: October 10, 2019.

Tkacz, Nathaniel, Facebook’s Libra, Or, the End of Silicon Valley Innovation. Medium (June 2019), https://medium.com/@nathanieltkacz/facebooks-libra-or-the-end-of-silicon-valley-innovation-9cb2d1539bcd, access: October 8, 2019.

Williams, Raymond, Television. Technology and Cultural Form (London: Fontana, 1974).

Footnotes

1 Raymond Williams, Television: Technology and Cultural Form (London 1974).

2 Arunesh Mathur et al., Dark patterns at scale: findings from a crawl of 11K shopping websites. Proc. ACM Hum.-Comput. Interact. 3, CSCW, Article 81 (2019).

3 See for example the influential paper from B.J. Fogg, Persuasive technology: using computers to change what we think and do. Ubiquity (December 2002), pp. 89–120.

4 This quasi-dialectical distinction was coined by Vilém Flusser, who in turn adapted it from Abraham A. Moles’ pioneering work on information aesthetics. Cf. Abraham A. Moles, Informationstheorie und ästhetische Wahrnehmung (Cologne 1971).

5 The influence of ARPA-funded projects (Advanced Research Projects Agency, now called DARPA = Defense Advanced Research Projects Agency) – as the driving force for the powerful cybernetic paradigm of the following decades – on developments in human-computer interaction is well documented. In fact, Alan Kay, the main protagonist of GUI development at Xerox PARC in the 1970s, discussed research in aeronautics as the direct predecessor of research on computer interfaces. Cf. Alan C. Kay, User interface. A personal view, in: multiMEDIA. From Wagner to Virtual Reality, eds. Randall Packer and Ken Jordan (New York 2001), pp. 121–131.

6 K. F. Hywel Murrell, Ergonomics. Man in His Working Environment (London and New York 1986 [1965]), p. xvi.

7 Ibid., p. xiv.

8 Ibid., p. x.

9 Ibid., p. viii.

10 In their constitution, the Ergonomics Research Society stated their mission as “the study of the relation between man and his working environment”. R. C. Browne, H. D. Darcus, C. G. Roberts, R. Conrad, O. G. Edholm, W. E. Hick, W. F. Floyd, G. M. Morant, H. Mound, K. F. H. Murrell and T. P. Randle, Ergonomics Research Society. British Medical Journal 1/4660 (1950), p. 1009. Murrell adopted this mission statement for the title of his book. It is not only a valuable source for critical studies on the history of objectification of labour, where workers or operators and technological systems constitute ever effective units. It is also a necessary reference for a genealogy of the interface.

11 Murrell, Ergonomics, p. xiv.

12 Ibid., p. xiv.

13 Ibid., p. xv.

14 Ibid.

15 “Ontology, or first philosophy, is not an innocuous academic discipline, but in every sense the fundamental operation in which anthropogenesis, the becoming human of the living being, is realized. From the beginning, metaphysics is taken up in this strategy: it concerns precisely that meta that completes and preserves the overcoming of animal physis in the direction of human history. This overcoming is not an event that has been completed once and for all, but an occurrence that is always under way, that every time and in each individual decides between the human and the animal, between nature and history, between life and death.” Giorgio Agamben, The Open: Man and Animal (Stanford 2003), p. 79.

16 Joachim Haupt, Facebook futures: Mark Zuckerberg’s discursive construction of a better world. New Media and Society, in print.

17 Cherie Lacey, Catherine Caudwell and Alex Beattie, The perfect user. Digital wellness movements insist there is a single way to “stay human”. Real Life Magazine (September 2019), https://reallifemag.com/the-perfect-user/, access: October 8, 2019.

18 Richard Barbrook and Andy Cameron identified, already over 20 years ago, the “contradictory mix of technological determinism and libertarian individualism” as the main ingredient of the Californian Ideology. Cf. Richard Barbrook and Andy Cameron, The Californian Ideology. Science as Culture 6/1 (1996), pp. 44–72.

19 See for example: Nathaniel Tkacz, Facebook’s Libra, Or, the End of Silicon Valley Innovation. Medium (June 2019), https://medium.com/@nathanieltkacz/facebooks-libra-or-the-end-of-silicon-valley-innovation-9cb2d1539bcd, access: October 8, 2019.

20 Such as “The Whole Earth” exhibition at HKW Berlin (April 26–July 7, 2013); catalogue: The Whole Earth. California and the Disappearance of the Outside, eds. Diedrich Diederichsen and Anselm Franke (Berlin 2013).

21 While Theranos has been the most flamboyant example in recent years, there are many more, from Uber CEO Travis Kalanick to Twitter CEO Jack Patrick Dorsey and the former WeWork CEO Adam Neumann, who stepped down after an IPO filing that put the company in turmoil. And Mark Zuckerberg is obviously getting ready for some kind of major cathartic event.

22 WeWork is just the most recent example: Scott Galloway, WeWTF, Part Deux (September 2019), https://www.profgalloway.com/wewtf-part-deux, access: October 8, 2019.

23 Michael A. Cusumano, Annabelle Gawer and David B. Yoffie, The Business of Platforms: Strategy in the Age of Digital Competition, Innovation, and Power (New York 2019).

24 See for example the recent settlement, where Facebook Inc. agreed to pay $40 million to advertisers for the knowing inflation of video view statistics by more than 900%: Motion for preliminary approval and notice of settlement, Case No. 4:16-cv-06232-JSW, filed on October 4, 2019, at the United States District Court for the Northern District of California, Oakland Division, available here: https://www.documentcloud.org/documents/6455498-Facebooksettlement.html, access: October 10, 2019.

25 See for example: Max Fisher and Amanda Taub, How YouTube Radicalized Brazil. The New York Times (August 2019) https://www.nytimes.com/2019/08/11/world/americas/youtube-brazil.html, access: October 10, 2019.

26 Chris Hughes, It’s Time to Break Up Facebook. The New York Times (May 2019), https://www.nytimes.com/2019/05/09/opinion/sunday/chris-hughes-facebook-zuckerberg.html, access: October 8, 2019. See also: Matt Stevens, Elizabeth Warren on Breaking Up Big Tech. The New York Times (June 2019), https://www.nytimes.com/2019/06/26/us/politics/elizabeth-warren-break-up-amazon-facebook.html, access: October 10, 2019. And, of course, the recent ECJ judgements on the liabilities of social media platforms.

27 See Julia Heldt’s article as well as Laurel Halo’s and Mari Matsutoya’s reflection on their project on Hatsune Miku in this volume. One of the central publications in this discourse is How We Became Posthuman. Virtual Bodies in Cybernetics, Literature, and Informatics (Chicago 1999) by N. Katherine Hayles, who provided a paper on her current research for this volume.

28 Paraphrased from Beatriz Colomina and Mark Wigley, Are We Human? Notes on an Archaeology of Design (Baden 2017), p. 274: “Design is never quite what it claims to be. Fortunately. Its attempt to smooth over all the worries and minimize any friction always fails, in the same way that almost every minute of daily life is organized by the unsuccessful attempt to bury the unconscious.”

29 Vilém Flusser, Vom Rückschlag der Werkzeuge auf das Bewusstsein (undated manuscript, Vilém Flusser Archive, document no. 2586); translation: the authors.

30 Liat Berdugo, The Halos of Devices: The Neo-Nimbus of Electronic Objects (February 2019), http://networkcultures.org/longform/2019/02/21/the-halos-of-devices-the-neo-nimbus-of-electronic-objects/, access: October 8, 2019.